Microsoft Announces Steps to Tackle Intimate Image Abuse

The Code of Conduct for Microsoft Generative AI Services prohibits the creation of sexually explicit content.

Software company Microsoft has announced new steps to prevent the circulation of non-consensual intimate imagery (NCII). The company says it does not allow the sharing or creation of sexually intimate images of someone without their permission across its consumer services.

This includes photorealistic synthetic NCII, meaning content that was created or altered using technology. The company says it does not allow NCII to be distributed on its services and also prohibits any content that praises, supports, or requests NCII.

Additionally, Microsoft does not allow any threats to share or publish NCII, a practice also called intimate extortion. This includes demanding money, images, or anything else of value from a person, or threatening them to obtain it, in exchange for not making the NCII public.

In addition to this policy, the company has tailored prohibitions in place where relevant, such as for the Microsoft Store. The Code of Conduct for Microsoft Generative AI Services also prohibits the creation of sexually explicit content.

Microsoft says it will continue to remove content reported directly to it worldwide, as well as violative content flagged to it by NGOs and other partners.

In search, it also continues to take a range of measures to demote low-quality content and elevate authoritative sources, while considering how to further evolve its approach in response to expert and external feedback.

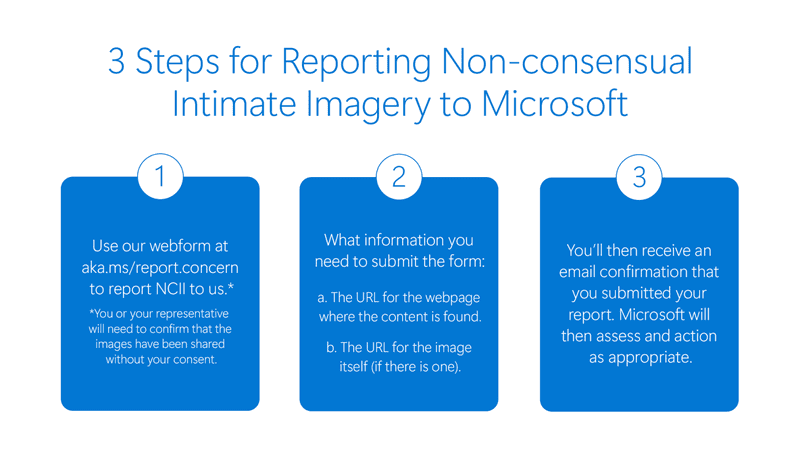

Through Microsoft’s centralized reporting portal, anyone can request the removal of a nude or sexually explicit image or video of themselves that has been shared without their consent.

As with synthetic NCII, Microsoft will take steps to address any apparent child sexual exploitation and abuse (CSEA) content on its services, including by reporting it to the National Center for Missing and Exploited Children (NCMEC).

Young people who are concerned about the release of their intimate imagery can also use NCMEC’s Take It Down service.

The company says it will continue to advocate for policy and legislative changes to deter bad actors and ensure justice for victims, while raising awareness of the impact of NCII on women and girls.

Courtesy: Microsoft